by Bob Glaser, UX Architect, Strategist, Voice and AI Interaction Design

Generative AI is best used when you need to quickly generate creative content, brainstorm ideas, automate repetitive writing tasks, or summarize large amounts of information. It’s particularly effective for drafting emails, creating marketing materials, composing code snippets, or even assisting with research and data analysis. These scenarios leverage AI’s ability to process and synthesize information efficiently, saving valuable time and effort.

However, generative AI shouldn’t be used when accuracy, confidentiality, or nuanced judgment are critical. Avoid relying solely on AI for sensitive legal documents, medical advice, or decisions that require deep understanding of context and ethics. Additionally, since AI models can sometimes produce incorrect or biased information, it’s important not to use them as the sole source for factual or high-stakes content. Always review and validate AI-generated material when accuracy truly matters.

These are important points, but there are several further aspects that should be considered. There are many technologies that allow us to do things far more quickly than doing them manually. However, sometimes speed is not the most effective way to produce the outcome.

Any new technology tends to fascinate and dazzle us with what it can do, to the point where we are blinded to what it can’t do. This by no means indicates that the technology is bad. These advancements are genuinely astounding and extraordinarily useful.

This is not an issue with generative AI alone; it has been around for decades. When we produce applications for content, the speed at which we can do it often produces excess or errors that go unnoticed. The faster we work, the less likely we are to review in detail the content that is produced. We become so dazzled or distracted by what the technology can do that we don’t see what it can’t do or, sometimes, what it is doing wrong. These types of problems are corrected over time, but usually much more slowly than we expect.

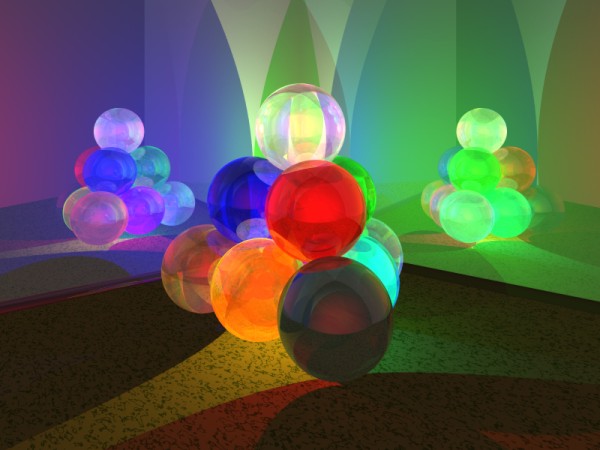

Take, for instance, some of the early examples of computer graphics. Technologies like ray tracing were astounding at the time. The incredible jump in the quality of the images compared to previous graphics provided a sense of realism that seemed close to perfectly lifelike. After a while, it became apparent that it was too perfect in some aspects and lacked something in others. Each time a new advancement was introduced, there was always the claim of “Now we have realism!” but soon there would be the realization that something still seemed to be missing. The anomalies of the real world are what provide a true sense of realism. Consider how quantum mechanics began to show an unpredictability that couldn’t be duplicated by existing math or physics.

These issues were related not only to rendering methods but also to modeling, mapping, and other aspects of the physical world that could often (but not always) be represented by the math of physics.

The point here is that when we are focused on these dazzling new technologies, we should always keep in mind that they can blind us to their deficits.

Essentially it comes down to the difference between editing as you go versus editing after the fact. The latter is where the problem arises.

Sources

Since many LLMs were initially trained on massive datasets scraped from the internet, there wasn’t a way to determine if the information being gathered was fact or fiction, opinion or truth. The concept was essentially to mimic natural language use and structure. From that standpoint, it is very effective. Unfortunately, this made it function like a con artist: it often tells us what we want to hear.

Now, if you ask a question in an area of your expertise, you can quickly assess the validity of the result in terms of both accuracy and thoroughness. You will see errors, most of them probably minor and occasionally some that are rather significant. The model’s predictions are likely to reflect how frequently something appears in the data that was “scraped,” so they tend to produce the common answer but not necessarily the correct one. Adding retrieval-augmented generation (RAG) to the LLM can significantly improve the quality, accuracy, and timeliness of the response. However, if you don’t know what external sources are being accessed, that accuracy can be called into question. Usually, these sources can be controlled to ensure accuracy when a company references only its own databases. Problems can still show up, though, even in those cases.
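The difference between a frequency-driven answer and one grounded in a controlled source can be shown with a toy sketch. This is only an illustration under stated assumptions: real LLMs predict from learned token probabilities and real RAG systems use similarity search, and the function names, the placeholder strings, and the sample data below are all hypothetical.

```python
from collections import Counter


def most_frequent_answer(answers):
    """Return the answer that appears most often, regardless of correctness."""
    return Counter(answers).most_common(1)[0][0]


def answer_with_retrieval(question, curated_sources, fallback_answers):
    """Prefer a curated source when it covers the question; otherwise fall
    back to the frequency-based guess. Returns (answer, origin)."""
    if question in curated_sources:
        return curated_sources[question], "curated source"
    return most_frequent_answer(fallback_answers), "frequency only"


# Hypothetical scraped data: the popular claim outnumbers the verified one.
scraped = ["popular claim"] * 3 + ["verified claim"]

# Hypothetical curated source that covers exactly one question.
curated = {"example question": "verified claim"}

print(most_frequent_answer(scraped))
print(answer_with_retrieval("example question", curated, scraped))
print(answer_with_retrieval("uncovered question", curated, scraped))
```

Note that the grounded answer is only as good as the curated source: if the entry in `curated` were itself wrong, the sketch would return the wrong answer with the more trustworthy-looking label.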

For example, a hospital system may reference only its own diagnostic data systems, where seemingly insignificant deficits of information can lead to problematic results. In the past, a weekly morbidity and mortality report might be brutal but exceptionally useful in learning from and correcting errors moving forward. However, due to the significant increase in litigation and insurance issues in the past few decades, hospitals may alter the way they report these in order to protect themselves financially from lawsuits. In these cases, this “smoothed out” data is what is stored in their own databases. This creates a small but still potentially dangerous risk when their internal RAG LLM is providing diagnostic information or recommendations. There are always other checks in the diagnostic process to increase the safety and accuracy of diagnoses and treatment, but a data point that seems correct can easily be overlooked, resulting in a poor outcome. This is a consequence of the black-box nature of how LLMs process and produce results.

Self-referencing

A different issue is the problem of recursion due to unintentional self-referencing. If a paper is being written using generative AI, small, unnoticeable errors can be introduced. As mentioned before, when people can increase the speed at which they produce, there is a natural tendency to proportionately reduce the review and correction period. This premature optimization has been well researched by a number of organizations, including the NIH.

The problem arises when the paper becomes part of the archive for a RAG LLM: the error is now reinforced by the system.
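A minimal simulation can make this feedback loop concrete. It is a deliberately simplified sketch, not a model of any real system: it assumes each new paper draws on the whole archive, inherits an existing error whenever review is skipped, and is then added back to the archive. The function name and the starting archive are hypothetical.

```python
def flawed_share_after(archive, rounds, reviewed):
    """Simulate papers being written from an archive and fed back into it.

    archive  -- list of booleans, True meaning the document carries the error
    rounds   -- number of new papers written and re-archived
    reviewed -- whether each new paper is checked before it is archived
    Returns the fraction of archived documents that carry the error.
    """
    archive = list(archive)  # work on a copy so the caller's list is untouched
    for _ in range(rounds):
        # Assumption: an unreviewed paper inherits any error already present.
        inherits_error = any(archive) and not reviewed
        archive.append(inherits_error)
    return sum(archive) / len(archive)


# Hypothetical starting archive: one flawed document out of four.
start = [True, False, False, False]

unreviewed = flawed_share_after(start, rounds=3, reviewed=False)
with_review = flawed_share_after(start, rounds=3, reviewed=True)
print(f"without review: {unreviewed:.2f}, with review: {with_review:.2f}")
```

Under these assumptions the flawed share grows with every unreviewed round and shrinks when review catches the error before archiving, which is the reinforcement effect described above.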

Other issues

Issues such as citing references and inadvertent plagiarism are additional unseen problems in the use of generative AI systems. These are compounded when self-referencing is added to the flow. Is it known whether the source of the information is a peer-reviewed paper that has since been disproved because of any of the following?

– a flawed data set

– a process that left out a human element, which skewed the purely empirical data

– a methodology that was insufficient for the process

The aspect of citing references also introduces, in certain cases, the issue of rights and licensing. This is being addressed by some companies by ensuring that the sources for the libraries of data are all owned by or licensed to that company.

Moving forward

With ever larger and faster systems, there is much work being done to address many of these issues. About nine years ago, I was working with smaller language models, and one of the issues was the time-consuming process of scrubbing data and then retesting it. Any good UX designer knows that if you don’t spend more time working out details, task flows, observation, etc., at the beginning of a project, then there are likely to be problems in the design of the UX that will take significantly longer to correct later.

Great strides are being made in AI: new concepts, methods, and algorithms, as well as improvements in existing methods and models. We must always be aware that every seemingly big jump forward may not look as big in hindsight a few years later.